Run Llama 2 Chat Models on Your Computer | by Benjamin Marie | Medium

To run LLaMA-7B effectively, it is recommended to have a GPU with at least 6GB of VRAM. If the 7B Llama-2-13B-German-Assistant-v4-GPTQ model is what you're … I ran an unmodified llama-2-7b-chat on 2x E5-2690v2, 576GB DDR3 ECC, and an RTX A4000 16GB: it loaded in 15.68 seconds and used about 15GB of VRAM and 14GB of system memory above the … What are the minimum hardware requirements to run the models on a local machine (Llama2 7B, Llama2 7B-chat, Llama2 13B, Llama2 …)? Windows 10 with NVIDIA Studio drivers 528.49, PyTorch 1.13.1 with CUDA 11.7 installed with …
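Most of the VRAM figures above follow from a simple product: parameter count times bytes per parameter. The following is a minimal sketch of that arithmetic, assuming the weights dominate memory use. It ignores activations, the KV cache, and framework overhead, and it uses nominal sizes such as `7e9` rather than exact parameter counts. The function name `weight_memory_gib` is hypothetical.

```python
# Rough estimate of the memory needed just to hold a model's weights.
# Assumption: weights dominate; activations, the KV cache, and framework
# overhead are NOT included, so real usage will be higher.

BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16": 2.0,  # bf16 uses the same 2 bytes per parameter
    "int8": 1.0,
    "int4": 0.5,  # e.g. 4-bit GPTQ, ignoring per-group scales and zeros
}


def weight_memory_gib(num_params: float, precision: str) -> float:
    """Return the approximate size of the weights in GiB (2**30 bytes)."""
    if precision not in BYTES_PER_PARAM:
        raise ValueError(f"unknown precision: {precision!r}")
    return num_params * BYTES_PER_PARAM[precision] / 2**30


if __name__ == "__main__":
    # Nominal Llama 2 sizes; the real checkpoints differ slightly.
    for size in (7e9, 13e9, 70e9):
        row = ", ".join(
            f"{p}: {weight_memory_gib(size, p):6.1f} GiB"
            for p in ("fp16", "int8", "int4")
        )
        print(f"{size / 1e9:>4.0f}B  {row}")
```

Under these assumptions, a 7B model needs about 13 GiB for fp16 weights. That is in line with the roughly 15GB of VRAM reported for the unmodified llama-2-7b-chat once overhead is added. A 4-bit quantized copy needs about 3.3 GiB, which would leave some headroom on a 6GB card.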

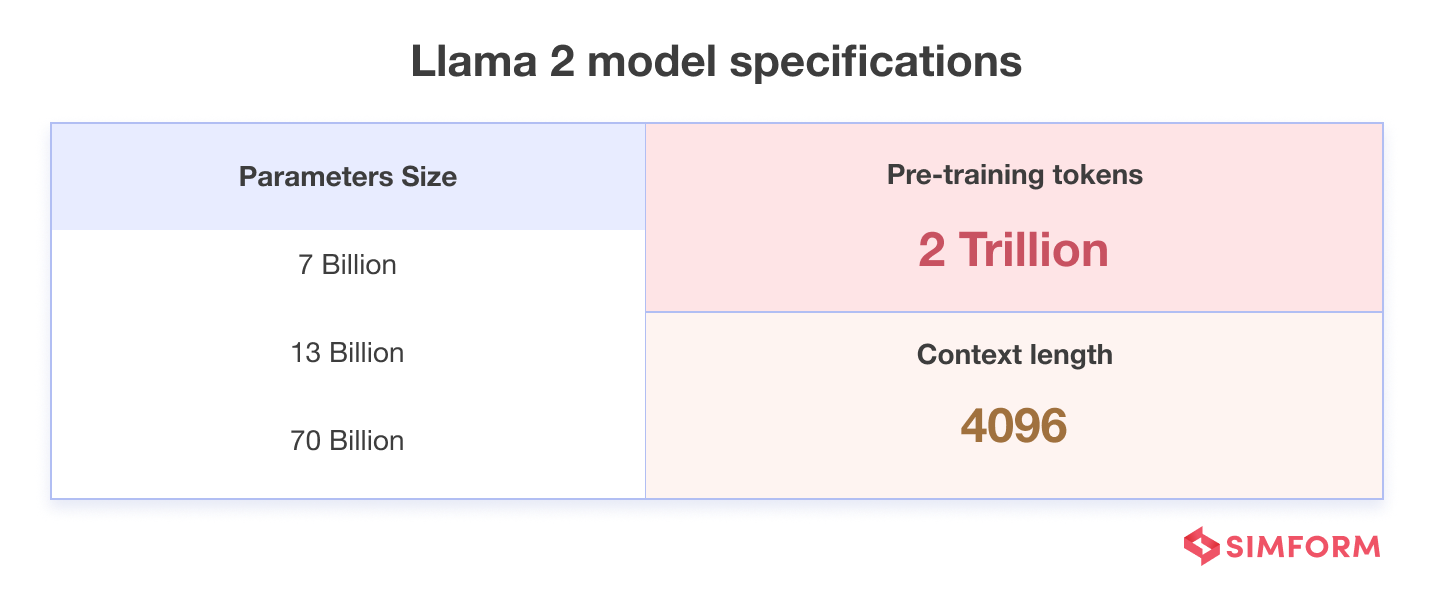

In this work, we develop and release Llama 2, a family of pretrained and fine-tuned large language models (LLMs), Llama 2 and Llama 2-Chat, ranging in scale from 7 billion to 70 billion parameters. On the series of helpfulness and safety … We release Code Llama, a family of large language models for code based on Llama 2, providing state-of-the-art performance among open models.

Llama 2 is a family of state-of-the-art open-access large language models released by Meta. The Code Llama base models are initialized from Llama 2 and then trained on 500 billion tokens of code data. One of the best ways to try out and integrate with Code Llama is to use the Hugging Face ecosystem, by following …
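Here is a minimal sketch of the Hugging Face route, using the `transformers` `pipeline` API. Several assumptions apply. It assumes `transformers`, `torch`, and `accelerate` are installed and that the machine has enough memory for the chosen checkpoint. It also assumes the Hub repo ids follow the `codellama/CodeLlama-<size>[-Python|-Instruct]-hf` naming. The helper names `code_llama_model_id` and `complete_code` are hypothetical and not part of any library.

```python
# Sketch: run Code Llama through the Hugging Face `transformers`
# text-generation pipeline.
# Assumptions: `transformers`, `torch`, and `accelerate` are installed and the
# machine has enough memory for the chosen checkpoint. Helper names are
# hypothetical, not part of any library.

CODE_LLAMA_SIZES = ("7b", "13b", "34b", "70b")
# Suffix that distinguishes the variants in the Hub repo names (assumed).
CODE_LLAMA_VARIANTS = {"base": "", "python": "-Python", "instruct": "-Instruct"}


def code_llama_model_id(size: str = "7b", variant: str = "base") -> str:
    """Build a Hub repo id such as 'codellama/CodeLlama-7b-Python-hf'."""
    if size not in CODE_LLAMA_SIZES:
        raise ValueError(f"unsupported size: {size!r}")
    if variant not in CODE_LLAMA_VARIANTS:
        raise ValueError(f"unsupported variant: {variant!r}")
    return f"codellama/CodeLlama-{size}{CODE_LLAMA_VARIANTS[variant]}-hf"


def complete_code(prompt: str, size: str = "7b", variant: str = "base",
                  max_new_tokens: int = 128) -> str:
    """Return the prompt plus the model's greedy continuation."""
    # Imported lazily so the id helper above works without transformers.
    from transformers import pipeline

    generator = pipeline(
        "text-generation",
        model=code_llama_model_id(size, variant),
        torch_dtype="auto",  # keep the checkpoint's stored precision
        device_map="auto",   # requires `accelerate`; places layers on GPU/CPU
    )
    # do_sample=False -> greedy decoding; by default the pipeline returns the
    # prompt followed by the generated text.
    outputs = generator(prompt, max_new_tokens=max_new_tokens, do_sample=False)
    return outputs[0]["generated_text"]
```

For example, `complete_code("def fibonacci(n):")` would download the 7B base checkpoint on first use and return the prompt followed by its continuation. For brevity, the sketch rebuilds the pipeline on every call. In practice you would create it once and reuse it.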

Llama 2 70B: clone it on GitHub and customize Llama's personality by clicking the settings button. "I can explain concepts, write poems and code, solve logic puzzles, or even name your pets." Llama 2 70B online: AI technology accessible to all, and the service is free. Experience the power of Llama 2, the second-generation large language model by Meta. Choose from three model sizes, pretrained on 2 trillion tokens and fine-tuned with over a million human annotations. Llama 2 is a collection of pretrained and fine-tuned generative text models ranging in scale from 7 billion to 70 billion parameters; this is the repository for the 70B pretrained model. This release includes model weights and starting code for pretrained and fine-tuned Llama language models (Llama Chat, Code Llama) ranging from 7B to 70B parameters.
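In many Llama 2 chat front ends, a "personality" setting like the one mentioned above is implemented as a system prompt. That mapping is an assumption here, because the demo's code is not shown. The sketch formats a conversation in the Llama 2-Chat layout used by Meta's reference code: `[INST] ... [/INST]` around each user turn, with an optional `<<SYS>>` block folded into the first one. The function name `build_llama2_chat_prompt` is hypothetical. The literal `"<s>"` and `"</s>"` strings stand in for the BOS and EOS tokens, which a real tokenizer inserts as special token ids.

```python
# Sketch: format a conversation in the Llama 2-Chat prompt layout.
# Assumption: "<s>" / "</s>" are textual stand-ins for the BOS/EOS tokens,
# which real tokenizers add as special token ids rather than plain text.

B_INST, E_INST = "[INST]", "[/INST]"
B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"


def build_llama2_chat_prompt(system, turns):
    """
    system: system prompt text (e.g. the assistant's "personality"), or "".
    turns:  list of (user_message, assistant_reply) pairs; only the final
            reply may be None, meaning the model should answer it next.
    """
    prompt = ""
    for i, (user, reply) in enumerate(turns):
        if reply is None and i != len(turns) - 1:
            raise ValueError("only the final turn may be missing a reply")
        if i == 0 and system:
            # The system prompt is folded into the first user message.
            user = B_SYS + system + E_SYS + user
        prompt += f"<s>{B_INST} {user.strip()} {E_INST}"
        if reply is not None:
            # Completed exchanges are closed with the end-of-sequence marker.
            prompt += f" {reply.strip()} </s>"
    return prompt
```

For example, `build_llama2_chat_prompt("You are a cheerful llama who loves naming pets.", [("Name my cat.", None)])` produces a single `[INST]` block whose `<<SYS>>` section carries the personality. The model's next tokens are then its reply.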
